Over the past several months, tech companies such as Google and Microsoft have been busy working on new customer experiences built around chatbots. In a nutshell, using a modern chatbot is similar to using the customer service chat bubble that you might find in the lower corner of a website. But instead of relying on live humans or a small range of answers, the process is entirely automated and draws on a large source of information. This is known as “generative AI”: a computer service creates a tailor-made answer.

This new technology draws on information to answer questions and carry out related tasks. For example, you could ask, “What was the most popular style of men's sunglasses in the year 2000?”

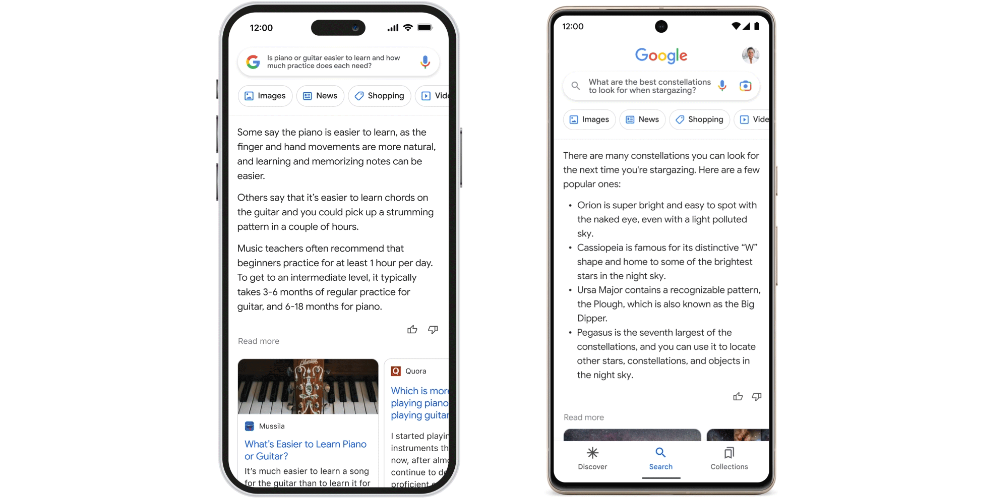

Image: Google

Google and Microsoft have been busy developing chatbot technology to complement their search engine products. Microsoft, whose solution takes the form of Bing Search, have teamed up with OpenAI, the company that creates the ChatGPT engine. Google, on the other hand, have opted to develop their own solution called Language Model for Dialogue Applications (LaMDA). Google have called their chat product Bard.

> Bard seeks to combine the breadth of the world’s knowledge with the power, intelligence and creativity of our large language models. It draws on information from the web to provide fresh, high-quality responses. Bard can be an outlet for creativity, and a launchpad for curiosity, helping you to explain new discoveries from NASA’s James Webb Space Telescope to a 9-year-old, or learn more about the best strikers in football right now, and then get drills to build your skills.

Answering questions in a smarter way is certainly helpful. But are there any risks involved for young people, especially given that this technology is new?

Taking a closer look

When a chatbot answers a question, keep in mind that its developers have designed it to be as helpful as possible. The content it serves will depend on the following factors:

- The data that the chatbot has been trained with

- Any fail-safes that have been put in place

The data could come from multiple sources, including books, general sources of information online (such as Wikipedia), and news articles. Chatbots could also have certain fail-safes in place, such as refusing to answer inappropriate questions.
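To make the idea of a fail-safe more concrete, here is a minimal Python sketch. It is an illustration only and not how Bard or Bing actually work: the blocklist, the refusal message, and the function names (`is_inappropriate`, `answer_with_fail_safe`, `placeholder_model`) are all hypothetical, and real chatbots are likely to rely on far more sophisticated checks than a simple word list.

```python
# Hypothetical blocklist for illustration; a real service would not
# rely on a short word list like this.
BLOCKED_TERMS = {"porn", "gore"}

# Hypothetical refusal message.
REFUSAL = "Sorry, I can't help with that."


def is_inappropriate(question: str) -> bool:
    """Return True if any word in the question is on the blocklist."""
    words = {w.strip(".,!?\"'").lower() for w in question.split()}
    return not BLOCKED_TERMS.isdisjoint(words)


def answer_with_fail_safe(question: str, generate_answer) -> str:
    """Check the question first, and only then ask the model for an answer."""
    if is_inappropriate(question):
        return REFUSAL
    return generate_answer(question)


def placeholder_model(question: str) -> str:
    """Stand-in for a real language model (an assumption for this sketch)."""
    return f"(model answer to: {question})"
```

In this sketch, a question containing a blocked word gets the refusal message, while any other question is passed through to the model. A simple word check like this is easy to get around, which is one reason why fail-safes on their own cannot guarantee that a chatbot never serves inappropriate content.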

As with any newly emerging technology, it’s possible that chatbots like Bard could serve inappropriate content. According to The Verge, Microsoft’s Bing chatbot (in its early stages of public testing) accidentally discussed “furry porn” with a user, although it went no further than that. This case is an outlier, especially given that Microsoft hasn’t broadly released its Bing chatbot, but it remains to be seen what content chatbots will treat as “acceptable” when answering questions in the future.

We would like to see more information about the sources that OpenAI and Google use for their data sets. By way of comparison, the developers behind the open-source large language model called BLOOM openly discuss the general sources of their data.

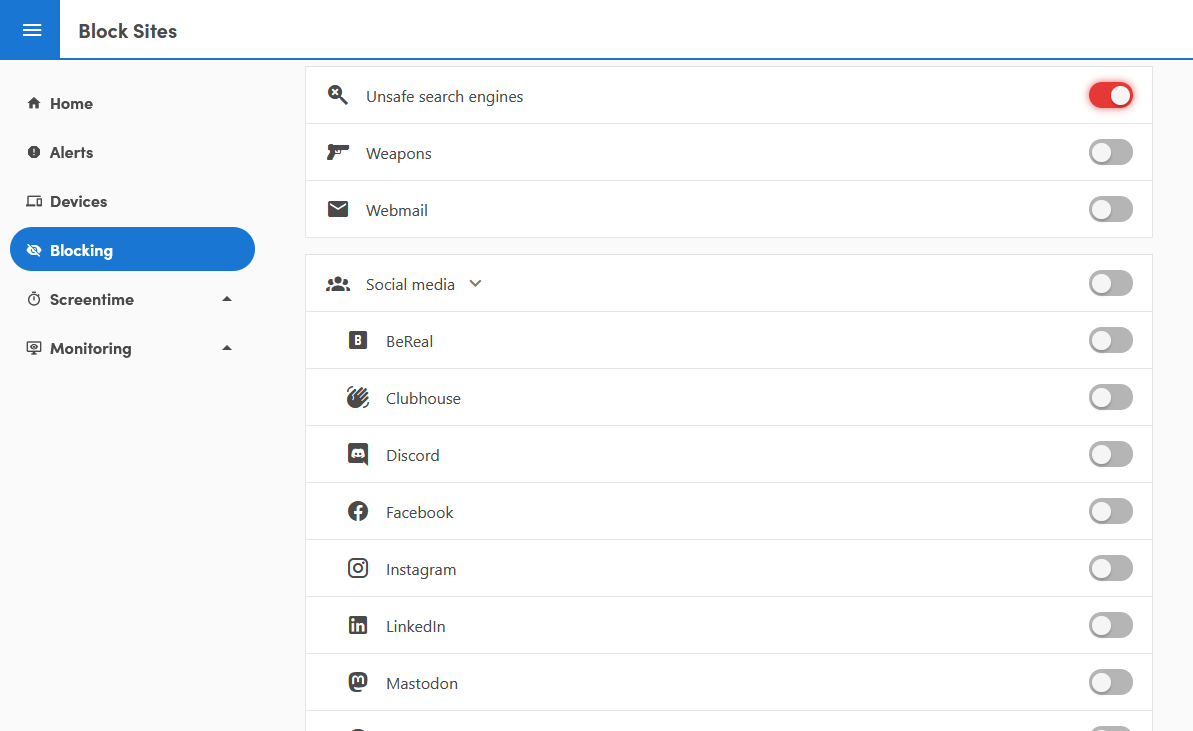

Get protected

Are you concerned about chatbots linking to porn websites? We can help! Safe Surfer enables you to provide a remarkably clean online surfing experience for your family. All devices that use Safe Surfer have SafeSearch enforced, which helps keep inappropriate content from appearing in search engines like Google and Bing. In addition to blocking millions of porn domains, we give you the ability to block unsafe search engines that don’t support enforcing SafeSearch. Find out more by clicking this link.