Update 18 August 2023: since this article was originally published in May 2022, we suspect that Google have made at least some improvements to the content served through YouTube Shorts to devices with YouTube restrictions applied. This highlights our broader concern about the technology sector, where new technologies and experiences for consumers can be released at the expense of safety. We think safety should be built in by design, not added later.

Over the past few weeks, Safe Surfer has researched Google’s latest YouTube feature, YouTube Shorts. We are disturbed by what we found, by how easy it is to access, and by how the algorithm serves further content to users who watch inappropriate videos.

Warning: this article is intended for parents and discusses mature topics.

Released to the public in July 2021, YouTube Shorts is a TikTok-style competitor in the looping short-video (or “reels”) market. Videos are no longer than 60 seconds, loop when they finish, and are scrolled through quickly. Now receiving increased marketing, Shorts appears as a prominent item on the navigation bar of the YouTube app. According to Google in its 2022 Q1 earnings call,

YouTube Shorts is now averaging over 30 billion daily views—that’s four times as much as a year ago.

YouTube comes preinstalled on most Android devices, including many smartphones and tablets. At its 2022 developer conference, Google said that over 3 billion Android devices had been active in the past month.

The algorithm behind YouTube uses an interest-based model to encourage people to spend more time on the platform. If you watch several videos about Obi-Wan Kenobi, a character from the Star Wars franchise, YouTube will likely add that keyword (that is, a topic) to your account as an “interest”. Your recommended feed is made up of videos drawn from this interest list, alongside other content such as videos you view regularly. The Shorts feature works in much the same way.
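To make the idea of an interest list concrete, here is a deliberately simplified Python sketch. It is our own illustration under assumed rules (a topic becomes an “interest” once it appears in at least two watched videos, and candidate videos are ranked by how many interests they match). It is not YouTube’s actual algorithm, and the watch history, topics, and function names are hypothetical.

```python
from collections import Counter

# Hypothetical watch history: each entry is the set of topic keywords
# attached to one video the viewer watched (illustrative data only).
watch_history = [
    {"star wars", "obi-wan kenobi"},
    {"obi-wan kenobi", "lightsabers"},
    {"star wars", "obi-wan kenobi"},
    {"cooking"},
]

# Hypothetical candidate videos: (title, set of topic keywords).
candidates = [
    ("Kenobi trailer breakdown", {"obi-wan kenobi", "star wars"}),
    ("Pasta in 60 seconds", {"cooking"}),
    ("Top 10 Jedi moments", {"star wars"}),
]


def build_interests(history, min_count=2):
    """Return the topics that appear in at least `min_count` watched videos."""
    counts = Counter(topic for video in history for topic in video)
    return {topic for topic, n in counts.items() if n >= min_count}


def recommend(videos, interests):
    """Rank videos by how many interests they match; drop videos matching none."""
    scored = [(len(topics & interests), title) for title, topics in videos]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [title for score, title in scored if score > 0]


if __name__ == "__main__":
    interests = build_interests(watch_history)
    print(sorted(interests))
    print(recommend(candidates, interests))
```

Running it treats the two Star Wars topics as interests and recommends the two Star Wars videos, while the cooking video is dropped because it matches no interest.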

In Shorts, if you don’t swipe past videos from a certain channel (or if you swipe back to them), you will likely be shown more videos from the same channel or topic. This is part of how the platform profiles viewers and encourages further use. There is a catch: suppose someone watches a number of shorts on a potentially unsavoury topic, such as “relationships”. If those shorts come from a channel that frequently posts soft-core porn, many more such videos are only a few taps away.
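The feedback loop described above can be sketched in a few lines of Python. In this hypothetical model (our assumption, not YouTube’s published design), watching a short to the end or swiping back to it raises that channel’s score, a quick swipe lowers it, and the next short comes from the highest-scoring channel. The thresholds, weights, and channel names are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FeedState:
    # Hypothetical per-viewer affinity score for each channel.
    affinity: dict[str, float] = field(default_factory=dict)

    def record_view(self, channel: str, watched_fraction: float, swiped_back: bool = False) -> None:
        """Adjust a channel's affinity based on how the viewer reacted to one short."""
        delta = 0.0
        if watched_fraction >= 0.8:   # watched (nearly) to the end
            delta += 1.0
        elif watched_fraction < 0.2:  # swiped past almost immediately
            delta -= 0.5
        if swiped_back:               # returned to the short: a strong signal
            delta += 1.0
        self.affinity[channel] = self.affinity.get(channel, 0.0) + delta

    def next_channel(self, available: list[str]) -> str:
        """Choose the available channel with the highest affinity (ties go alphabetically)."""
        return max(sorted(available), key=lambda ch: self.affinity.get(ch, 0.0))


if __name__ == "__main__":
    feed = FeedState()
    feed.record_view("gaming_clips", 0.1)
    feed.record_view("relationship_shorts", 1.0)
    feed.record_view("relationship_shorts", 0.9, swiped_back=True)
    print(feed.affinity)
    print(feed.next_channel(["cooking", "gaming_clips", "relationship_shorts"]))
```

Two engaged views of one channel are enough for it to win the next pick, which illustrates how quickly a viewer can end up several shorts deep on a single channel.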

Beginning our investigation

Besides the addictive nature of Shorts, we found a concerning lack of moderation. Our starting environment was a new virtual Android device created with the emulator in Android Studio, Google’s development environment for Android:

- To avoid any bias in the presentation of content, we created a new Google account as a “13-year-old male”, signing in to the device as someone who had never used YouTube before.

- To avoid unsavoury content, we went out of our way to enable Restricted Mode, which is tucked away in the settings of the YouTube app. According to YouTube, this “helps hide potentially mature videos”.

Swipe up for porn

Officially, Google prohibits pornographic videos from being uploaded to the YouTube platform. YouTube’s policy describes this as including any of the following:

Depiction of genitals, breasts or buttocks (clothed or unclothed) for the purpose of sexual gratification … depicting sexual acts, genitals or fetishes for the purpose of sexual gratification … dramatised content such as sex scenes …

But as we detail below, content enforcement in Shorts is unacceptable. Even with Restricted Mode enabled, we found inappropriate content easily, within mere minutes.

Here is a small selection of what we found during our examination:

- A television scene depicting a naked couple having sexual intercourse (127K likes)

- A man and a woman inside a bathroom, with the man supposedly “shaving” the woman’s genitals (20K likes)

- A man and a woman simulating sexual intercourse (26K likes)

- A man and a woman lying on a bed, demonstrating various “cuddling positions” (360K likes)

- A woman lying on a bed, displaying her cleavage to the camera (201K likes)

Not only is this widely viewed content in direct violation of YouTube’s upload policy, but all of these shorts could be viewed by anyone as young as 13 years old, the age at which you are allowed to create a Google account and use YouTube for yourself (with the permission of your parents). YouTube is classified as a “12+” app on the Google Play Store. One of these shorts was tagged with hashtags about current events (#Russia and #Putin), which can make a short easier to find.

All things considered, YouTube’s Restricted Mode did little to protect us from soft-core porn in Shorts. And this is not all we found.

Swipe up for phishing

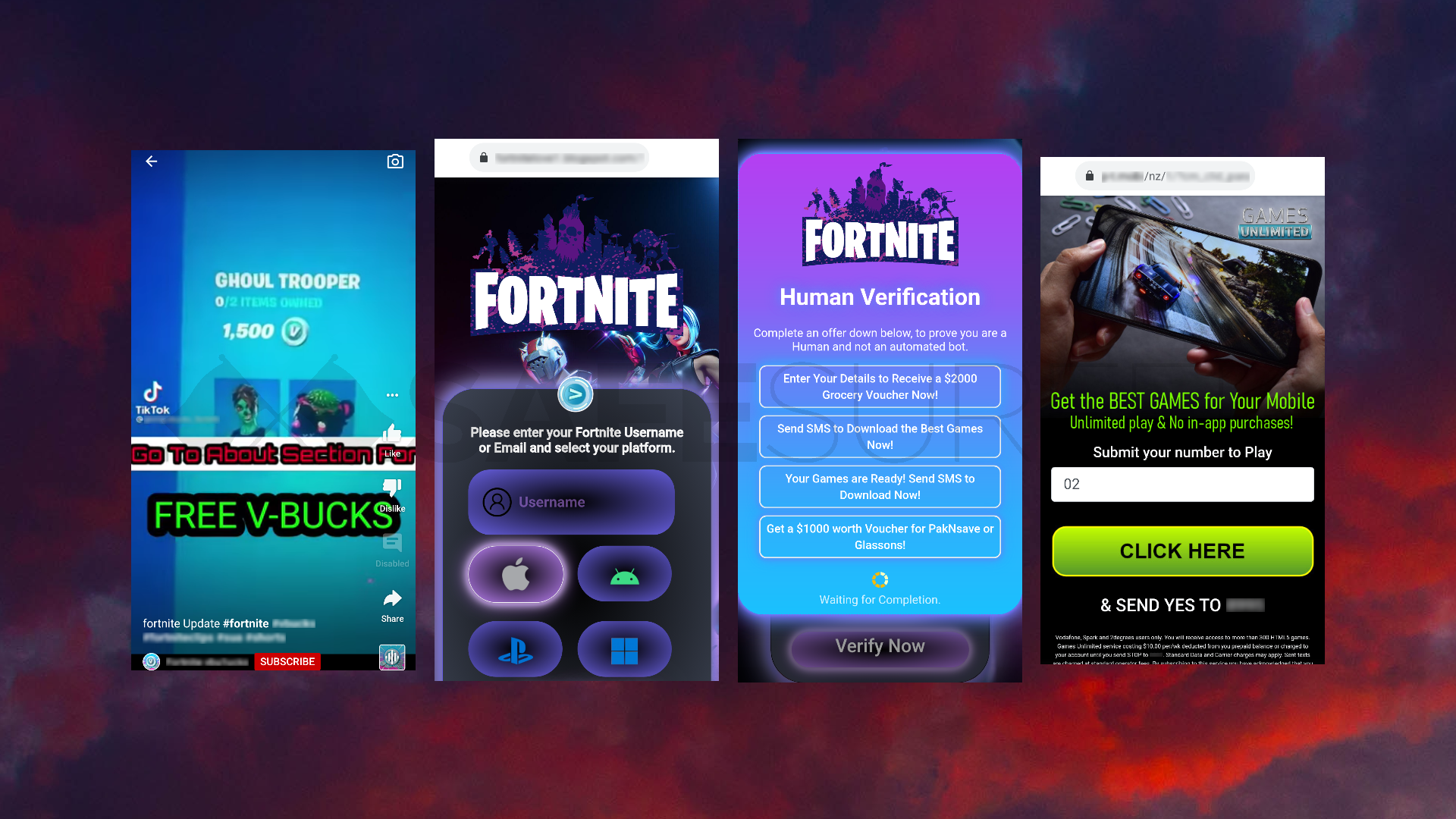

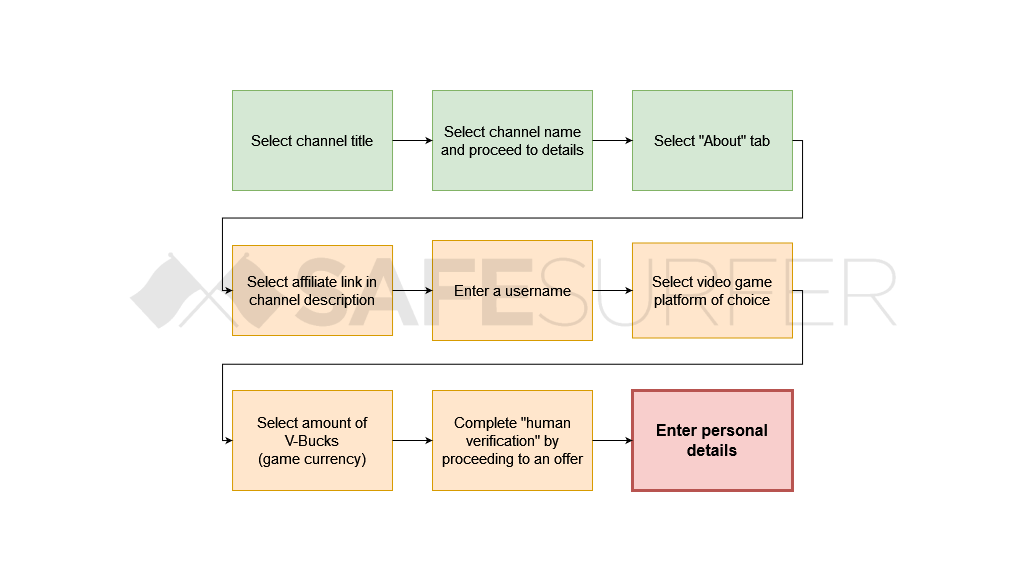

On YouTube Shorts, Safe Surfer discovered an affiliate scheme that harvests information from audiences interested in certain video games, such as Fortnite. After a little digging, we found a number of these affiliate-based shorts targeting video gamers.

In this technique, victims are led out of the YouTube app to a website run by an affiliate of the phishing scheme. YouTube did not warn us before we left the app to visit an external website. The “human verification” feature on the affiliate website gives victims several options, each of which ends in the collection of some kind of personal information. The scheme detected our IP address as originating in New Zealand, so each option redirected us to a separate website tailored for a Kiwi audience. One option asked for various personal details, such as a home address.

In one example (as per the fourth screenshot in the image above), you are asked to enter a New Zealand mobile number registered with Vodafone, Spark, or 2Degrees, all local service providers. The fine print on this website states that you will be signed up to a $10.00 NZD per month subscription for the “service” of HTML5 games, which would usually be free.

In another example, you are promised a high-value voucher to use at PAK’nSAVE or Glassons, both retail businesses operating in New Zealand.

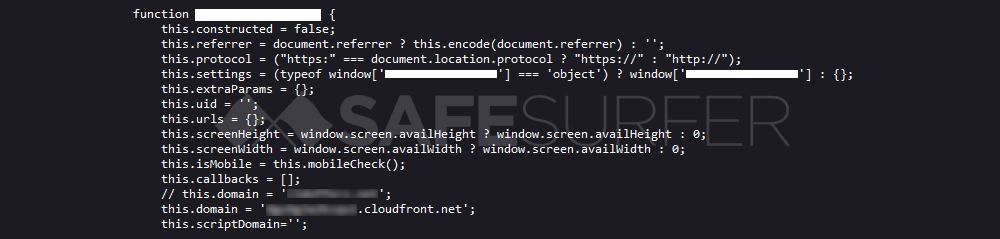

When you proceed to verify your video game currency request, the scheme retrieves and runs a JavaScript file. Here is a sample of code from the file:

This code “locks content”: the user must complete a task before they are supposedly rewarded. We have designed Safe Surfer to block new threats like this as soon as we discover them or are made aware of them. Having classified these domains, we have automatically blocked this scheme for all devices protected by Safe Surfer worldwide.
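This article does not describe how Safe Surfer’s filtering works internally. As a simplified illustration only, assuming a DNS-level filter that checks each requested hostname against a set of classified domains, blocking a scheme like this can be done by matching the hostname and each of its parent domains. The blocklist entries are hypothetical placeholders on the reserved `.test` domain, not the scheme’s real domains, and this is not Safe Surfer’s actual implementation.

```python
from __future__ import annotations

# Hypothetical blocklist entries (reserved example domains, lower-case).
BLOCKLIST = {"locker-example.test", "offers-example.test"}


def normalise(domain: str) -> str:
    """Lower-case a hostname and drop any trailing dot from a fully qualified name."""
    return domain.strip().lower().rstrip(".")


def is_blocked(domain: str, blocklist: set[str] = BLOCKLIST) -> bool:
    """Return True if the hostname or any of its parent domains is on the blocklist."""
    labels = normalise(domain).split(".")
    # Check "a.b.example.test", then "b.example.test", then "example.test", then "test".
    for i in range(len(labels)):
        if ".".join(labels[i:]) in blocklist:
            return True
    return False


if __name__ == "__main__":
    for host in ["cdn.locker-example.test", "LOCKER-EXAMPLE.TEST.", "notlocker-example.test", "www.youtube.com"]:
        print(host, is_blocked(host))
```

Matching on whole domain labels, rather than a plain string suffix, means a classified domain also covers its subdomains without accidentally blocking an unrelated name such as `notlocker-example.test`.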

The phishing system is hosted on Amazon CloudFront, a legitimate content delivery network (CDN). The scheme uses a custom link system to direct each victim to the right offer, which may ultimately earn the affiliate a reward:

This link holds various information, such as:

- The offer in question that the victim is being led to.

- Whether or not the victim is using a mobile device.

- A random identifier assigned to the victim, which in this situation acts as a tracker. This could end up in a database for targeted use in the future.
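The scheme’s real link is not reproduced here. As a hypothetical example, a link carrying the three pieces of information listed above might encode them as query parameters, and the Python sketch below shows how such a link can be unpacked for analysis. The parameter names (`offer`, `mobile`, `sid`) and the example URL are our assumptions, not the scheme’s actual format; the hostname is the placeholder CloudFront domain used in Amazon’s documentation.

```python
from urllib.parse import parse_qs, urlsplit


def parse_offer_link(url: str) -> dict:
    """Pull the offer, device type and victim identifier out of a hypothetical affiliate link."""
    parts = urlsplit(url)
    query = parse_qs(parts.query)

    def first(name: str):
        # parse_qs maps each parameter to a list of values; take the first, if any.
        values = query.get(name)
        return values[0] if values else None

    return {
        "host": parts.hostname,
        "offer": first("offer"),              # which offer the victim is sent to
        "is_mobile": first("mobile") == "1",  # whether the victim is on a mobile device
        "tracker_id": first("sid"),           # random per-victim identifier
    }


if __name__ == "__main__":
    link = "https://d111111abcdef8.cloudfront.net/r?offer=nz-voucher&mobile=1&sid=9f2c7a1e"
    print(parse_offer_link(link))
```

Because the identifier travels in every link, each click can be tied back to the same victim, which is what makes it useful as a tracker.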

Conclusion

In publishing this article, we don’t wish to create a “panic culture” around YouTube. But we do think the very nature of Shorts is risky for young people. We are calling on Google to improve content enforcement in YouTube’s Restricted Mode, and to add an option to its network control settings that disables the Shorts feature (including video listings marked for Shorts).
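For context, YouTube’s existing network-level controls work by pointing a small set of YouTube hostnames at a restricted endpoint, for example with DNS CNAME overrides. The hostnames and targets below follow Google’s published guidance as we understand it and should be checked against Google’s current documentation before use. This minimal sketch simply generates those overrides; the only choice it offers is the Restricted Mode level (strict or moderate), with no separate setting for Shorts.

```python
from __future__ import annotations

# YouTube hostnames covered by network-level restriction (verify against current Google docs).
YOUTUBE_HOSTS = [
    "www.youtube.com",
    "m.youtube.com",
    "youtubei.googleapis.com",
    "youtube.googleapis.com",
    "www.youtube-nocookie.com",
]

# CNAME targets for each Restricted Mode level.
RESTRICT_TARGETS = {
    "strict": "restrict.youtube.com",
    "moderate": "restrictmoderate.youtube.com",
}


def cname_overrides(level: str) -> dict[str, str]:
    """Map each YouTube hostname to the CNAME target for the chosen restriction level."""
    if level not in RESTRICT_TARGETS:
        raise ValueError(f"unknown level {level!r}; expected 'strict' or 'moderate'")
    target = RESTRICT_TARGETS[level]
    return {host: target for host in YOUTUBE_HOSTS}


if __name__ == "__main__":
    for host, target in cname_overrides("strict").items():
        print(f"{host} CNAME {target}")
```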

Safe Surfer is an innovative software company based in Tauranga, New Zealand. We’re passionate about using the very latest technology behind the scenes to give you a remarkably clean online surfing experience. Visit the Safe Surfer dashboard today to protect your devices from pornography, phishing attacks, and other harmful online content.